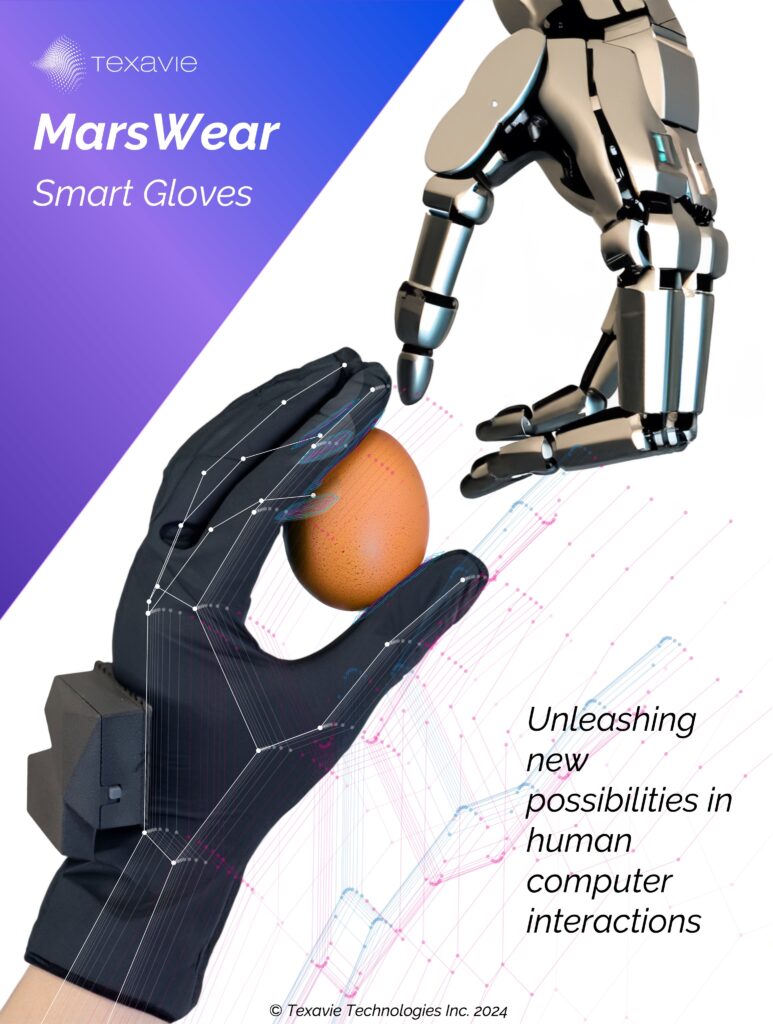

Thrilled to announce our recent publication in Nature Machine Intelligence on our MarsWear Smart Gloves, in collaboration with the Flexible Electronics and Energy Lab and Prof. Janice Eng of the Department of Physical Therapy, Faculty of Medicine, at the University of British Columbia!

You can read the full article here: https://www.nature.com/articles/s42256-023-00780-9

In this groundbreaking study, we introduce washable, stretchable smart textile gloves that accurately capture hand and finger movements, as well as grasp forces during interaction with physical objects. Notably, we achieve an unprecedented average joint-angle accuracy of 1.4 degrees across subjects, rivaling the performance of expensive motion-capture cameras. What sets our gloves apart is that they can be used anywhere, free of the limitations cameras face with field of view, occlusion, background noise, and multiple users. This performance comes from precise multimodal sensing of stretch and pressure during hand movements, using stretchable sensor yarns embedded in the gloves together with inertial measurement units (IMUs) and machine learning algorithms.

For the first time, we showcase complex real-time multi-finger typing on any surface, detected from dynamic hand poses and surface-tapping pressure.

Additionally, we demonstrate recognition of 100 static and dynamic gestures adapted from American Sign Language (ASL), showing the potential for fluid and accurate real-time translation.

Our research also demonstrates accurate capture of hand pose and grasp forces during interaction with various objects, illustrating the potential for applications that bridge the real and virtual worlds.

This work promises to push the boundaries of complex human-computer interaction, remote movement assessment and therapy, robotic control, and metaverse applications.

To learn more, or for inquiries and partnerships, please contact us at info@texavie.com!

You can read news coverage of this work at: